accesses since April 3, 2007

copyright notice

Hal Berghel

You've noticed that phishing is ubiquitous now. Like the Nigerian 419 scam, it seems to pervade every aspect of our personal and professional online life. By some accounts, losses due to phish scams exceed $1 billion and affect millions of victims. Have you ever wondered why this is so? I'll explain some of these phish phactors below.

One thing that accounts for phishing's persistence, despite universal investigation by IT security organizations and law enforcement, is that it is a blend of three elements, each of which relates to a different aspect of human experience: technical subterfuge, perception management and social engineering. Phishing shares the technical subterfuge dimension with hacking and computer viruses. It shares perception management with credit card skimming, three-card monte and a host of confidence games. It shares social engineering with gaining illegal entry to a secure facility by “tailgating” behind someone with legitimate access, or finding passwords taped to the bottom of desk drawers. The fact that phishing is three-dimensional makes it hard to defeat, because the exploit is a simultaneous attack against our technical understanding, our general propensity to believe what we see, and our conviction that we can't be fooled easily. Phishing is literally “triple-threat” cybercrime.

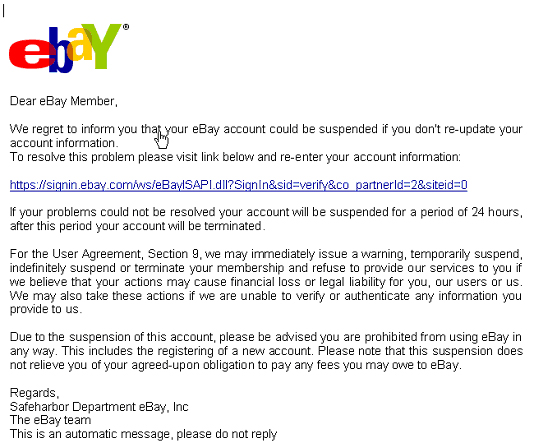

Figure 1: Typical Phishing Attack

A more-or-less typical phishing exploit appears in Figure 1. In this case the Phish is an email (technical subterfuge) with an apparent invitation by an online auction house to update account information (perception management). The victim is enticed to do so because it is natural to want to ensure that one's personal accounts are accurate (social engineering).

Naturally, from the IT perspective the most interesting dimension of phishing is technical subterfuge. One useful way of characterizing the technical aspect is by drawing a distinction between the strategy and tactic of the particular attack. Consider:

Of course this is only a partial list, but it illustrates the variety of phishing attacks that are in circulation – each category of which exploits a different weakness in our technical understanding of the way Internet services work.

I'll illustrate the principle with a simple example of URL spoofing. The original versions of Internet Explorer had a unique characteristic that was readily and easily exploited by phishers: it ignored everything in a URL that followed <ESC>, which is represented as “%01%00” in the query string. In light of this observation, compare the following three URLs:

The actual URL in a phishing email:

http://www.trusted-site.com%01%00@fakesite.com/fakepage.html

The URL that the user sees:

http://www.trusted-site.com

The URL that the browser connects to:

http://fakesite.com/fakepage.html

This isn't rocket science, but one must admit that it's clever. The fact of the matter is that the designers of Internet Explorer never anticipated anyone entering an escape sequence in a URL, so the browser didn't look for it, to its users' cost. Here the technical subterfuge took in not only users but also software designers. This is a simple example of script embedding.
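To see why the connection ends up at the fake site, it may help to look at how an ordinary URL parser splits the address. What follows is a minimal sketch using Python's standard `urllib.parse`, not a reconstruction of Internet Explorer's internals; the helper name `describe_url` is made up for illustration. Everything before the “@” is treated as login information, and only the part after it names the host.

```python
from urllib.parse import urlsplit

def describe_url(url: str) -> dict:
    """Split a URL into the part a reader tends to trust and the real host."""
    parts = urlsplit(url)
    return {
        "userinfo": parts.username,  # text before '@': login data, not a host
        "host": parts.hostname,      # where the connection actually goes
        "path": parts.path,
    }

info = describe_url("http://www.trusted-site.com%01%00@fakesite.com/fakepage.html")
print("looks like:", info["userinfo"])
print("connects to:", info["host"])
```

A mail filter built along these lines could simply refuse any link whose URL carries a userinfo part at all, since legitimate sign-in links rarely need one.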

A similar situation arises with URL or domain spoofing. Let's investigate how we might play with a URL in such a way that the unsuspecting user wouldn't notice. There are three basic cases to consider: where we add, substitute, or delete some elements so that the URL in the Phish email is subtly different from the correct URL. Consider the following three cases.

Note that the domain tail of the first fake URL is “validation.co.uk”, which is the domain that appears in the Internet domain registry. Obviously this doesn't have anything to do with Barklayze bank. In the second fake, the subtle difference is the substitution of a one for an “L”: they look the same in the browser window, but the link is very different. The domain in this case is some “A-O-One”, not AOL as we would have guessed. I haven't seen an example of deletion in the wild yet, but you can rest assured that it's out there someplace.
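The add, substitute, and delete cases all amount to a domain that sits one keystroke away from the real one. Here is a rough sketch of how that might be checked, assuming a plain edit-distance comparison against a domain you already trust. The function names and the sample domains are illustrative only, and the check does nothing about the first kind of fake, where a trusted name is merely prefixed to someone else's domain.

```python
def edit_distance(a: str, b: str) -> int:
    """Classic Levenshtein distance: insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete from a
                            curr[j - 1] + 1,      # insert into a
                            prev[j - 1] + cost))  # substitute
        prev = curr
    return prev[-1]

def is_lookalike(candidate: str, trusted: str) -> bool:
    """True when candidate differs from trusted by exactly one character edit."""
    c, t = candidate.lower(), trusted.lower()
    return c != t and edit_distance(c, t) == 1

# Hypothetical examples of the three cases: substitute, add, delete.
for fake in ("ao1.com", "aoll.com", "al.com"):
    print(fake, is_lookalike(fake, "aol.com"))
```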

Let's return to our original example for a deeper analysis. We'll call this a “phish phillet.” (Sorry, but I've been waiting a long time for the right moment to use this phrase.) In any case, the way to develop skill in this area is to click on “view source” in your mailer. If you use a basic POP3 or IMAP mailer like Eudora this is simpler. Some more sophisticated mailers like Notes will suppress scripts, MIME inclusions, etc. and may make the analysis harder.

When I view the source of the Phish bait above, I get the following:

<x-html>

<html><p><font face="Arial"><A HREF="https://signin.ebay.com/ws/eBayISAPI.dll?SignIn&sid=verify&

co_partnerId=2&siteid=0"><map name="xlhjiwb"><area coords="0, 0, 646, 569" shape="rect" href="http://218.1.XXX.YYY/.../e3b/"></map><img SRC="cid:part1.04050500.04030901@support_id_314202457@ebay.com" border="0" usemap="#xlhjiwb"></A></a></font></p><p><font color="#FFFFF3">Barbie Harley Davidson in 1803 in 1951 AVI

</x-html>

Look at this closely. The first thing that comes to mind is that there is one humongous image map in this email that is 646 by 569 pixels in size. One can confirm that this is essentially the entire email. So what appears as a logo and text is actually a graphic. This is like mailing a photograph of a letter to someone. This alone should raise a red flag.
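Reading the source by eye works, but the same inspection can be roughed out in code. The sketch below assumes Python's standard `html.parser` and a class name, `LinkCollector`, invented for this example. It is fed an abbreviated copy of the markup above and lists where the visible anchor points and where the image-map area points.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collect link targets from <a> tags and image-map <area> tags."""

    def __init__(self):
        super().__init__()
        self.anchors = []  # href values of <a> tags
        self.areas = []    # (coords, href) pairs of <area> tags

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)  # tag and attribute names arrive lowercased
        if tag == "a" and "href" in attr:
            self.anchors.append(attr["href"])
        elif tag == "area" and "href" in attr:
            self.areas.append((attr.get("coords", ""), attr["href"]))

# Abbreviated from the phish source shown above.
SOURCE = (
    '<A HREF="https://signin.ebay.com/ws/eBayISAPI.dll?SignIn">'
    '<map name="xlhjiwb">'
    '<area coords="0, 0, 646, 569" shape="rect" href="http://218.1.XXX.YYY/.../e3b/">'
    '</map></A>'
)

collector = LinkCollector()
collector.feed(SOURCE)
collector.close()
print("anchor targets:", collector.anchors)
print("image-map targets:", collector.areas)
```

When the two lists name different hosts, as they do here, the link that looks respectable is not the one a click on the image will follow.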

Second, even if we allow that an image map might be useful, “xlhjiwb” is an exceedingly strange name for it. It violates the principle of programming mnemonics whereby you name files and variables in such a way that their use is easy for another programmer to understand. Flag #2.

Third, what do Barbie, Harley Davidson, 1803, 1951 and AVI have to do with this email or the online auction? Well, most spam filters use Bayesian analysis to detect spam. Basically, the email is statistically analyzed and prioritized as a result of keyword and key phrase analysis. So an email with three occurrences of Viagra and two of Cialis or Ciali$ is likely to be labeled spam. At this point, no spam filter that I know of has it in for Barbie or Harleys, so the presence of these words and phrases suggests an overt attempt to fool the Bayesian analyzer. Flag #3.
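The padding trick is easier to appreciate with numbers. The following toy sketch assumes a naive-Bayes-style filter that adds up per-word log-odds; real filters differ in detail, and every probability in the table is invented for illustration rather than measured. It shows how a few innocuous words can drag a message's spam score down.

```python
import math

# Hypothetical per-word values of P(spam | word); invented, not measured.
WORD_SPAM_PROB = {
    "verify": 0.90,
    "account": 0.80,
    "barbie": 0.20,
    "harley": 0.20,
    "davidson": 0.20,
}

def spam_score(words, default=0.5):
    """Combine per-word probabilities by summing their log-odds."""
    log_odds = 0.0
    for word in words:
        p = WORD_SPAM_PROB.get(word.lower(), default)  # unknown words are neutral
        log_odds += math.log(p) - math.log(1.0 - p)
    return 1.0 / (1.0 + math.exp(-log_odds))

bait = ["verify", "account"]
padded = bait + ["Barbie", "Harley", "Davidson"]
print("bait alone:", round(spam_score(bait), 3))
print("with padding:", round(spam_score(padded), 3))
```

Under these made-up numbers the padded message scores well below the bare one, which is exactly what the phisher is counting on.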

Next, if we click on the link, where will it take us? The source shows clearly that it will take us to an IP address starting with 218 (China). What is the likelihood that the auction house has its server farm in China? Flag #4.
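A link that names a bare IP address instead of a domain is easy to test for. This is a minimal sketch using Python's standard `ipaddress` and `urllib.parse` modules. The function name is my own, and because the address in the phish above is redacted, the example substitutes 203.0.113.7, an address reserved for documentation.

```python
import ipaddress
from urllib.parse import urlsplit

def host_is_ip_literal(url: str) -> bool:
    """True when the URL's host is a raw IPv4 or IPv6 address, not a name."""
    host = urlsplit(url).hostname
    if host is None:
        return False
    try:
        ipaddress.ip_address(host)
    except ValueError:
        return False  # not parseable as an address, so it is a name
    return True

# 203.0.113.7 is a stand-in documentation address, not the one in the phish.
print(host_is_ip_literal("http://203.0.113.7/login"))
print(host_is_ip_literal("https://signin.ebay.com/ws/eBayISAPI.dll"))
```

Working out which country an address belongs to takes a registry or geolocation lookup, which is outside the scope of this sketch.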

Finally, and this is the most subtle of all, note the presence of “...” in the link. Those aren't dots of laziness. That's a subdirectory on a Unix server. “.” and “..” are reserved in Unix, but “...” is fair game for a subdirectory. This suggests that the server may be compromised. If the owner reviews the root directory, he will see something like

>ls

.

..

...

at the top of the directory list. To the unsuspecting, the three-dot subdirectory will go unnoticed. Flag #5.
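A server owner who doesn't trust his eyes can let a script do the looking. The sketch below assumes a Unix-like file system and uses a throwaway temporary directory. The function name `suspicious_dot_names` is hypothetical. Python's `os.listdir` never reports “.” and “..”, so any all-dots name it does return is a real entry worth a second look.

```python
import os
import tempfile

def suspicious_dot_names(directory: str) -> list:
    """Return entries whose names consist only of dots, such as '...'."""
    return sorted(
        name for name in os.listdir(directory)  # '.' and '..' are never listed
        if name and set(name) == {"."}
    )

# Demonstration in a scratch directory rather than a real web root.
with tempfile.TemporaryDirectory() as root:
    os.mkdir(os.path.join(root, "..."))     # the hiding place from the example
    os.mkdir(os.path.join(root, "public"))  # an ordinary directory
    found = suspicious_dot_names(root)

print(found)
```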

There's one more cue that something is awry. See if you can find it.

CONCLUSION

Most Phish frequent the shallows. Typically, they

Although these have been around long enough that few still fall for them, they were very effective when first launched. The variations on these themes are limited only by the cybercrook's imagination.

It is more useful to consider phish bait in the abstract, as these variations are limitless. Here are some guidelines on what to look for in future phish bait:

So, that's what you have to look for. Good phishing!

b.t.w., the last cue is the appearance and location of the cursor. Note that the hand betrays that it's an active link, though it's not even close to the presumed anchor in the email. That suggests that the real anchor is actually the entire email.

Hal Berghel is (among other things) Director of the Center for CyberSecurity Research (ccr.i2.nscee.edu) and Co-Director of the Identity Theft and Financial Fraud Research and Operations Center (www.itffroc.org). He also owns a security consultancy, Berghel.Net.